Unsloth: Llama 3.2 Vision Fine-tuning Now Supported

Vision models are now supported in Unsloth, including Meta's Llama 3.2 Vision models in 11B and 90B sizes. Unsloth makes vision fine-tuning 2x faster and uses up to 70% less memory.

Read our blog: https://unsloth.ai/blog/vision

To help you train your own custom Llama 3.2 Vision model, we've uploaded free Google Colab notebooks for fine-tuning:

Other multimodal models like LLaVA, Pixtral and Qwen VL are also supported. We've uploaded models in GGUF, 16-bit and 4-bit formats, and you can now follow us on Hugging Face.

Gradient Accumulation Bug Fixes

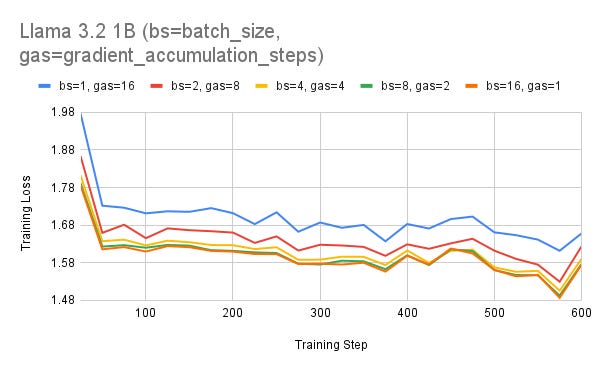

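A while ago, we fixed a universal issue in gradient accumulation that negatively impacted everyone's training, pre-training and fine-tuning runs for sequence models like LLMs. The loss in each accumulation step was normalized by that step's own token count, so when sequence lengths varied, the accumulated loss did not match the loss from full-batch training. Unsloth's gradient accumulation fix ensures that loss calculations, and therefore training runs, are accurate.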

The goal of gradient accumulation is to mimic full-batch training with reduced VRAM usage. Gradient accumulation is also used in DDP and multi-GPU setups, so this issue affected large-scale training runs as well.
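To make the normalization problem concrete, here is a minimal pure-Python sketch that works on per-token losses instead of real model outputs. It assumes token-level cross-entropy averaged over tokens, and the function names are hypothetical illustrations, not Unsloth's implementation or API. It compares three quantities: the full-batch mean, a naive accumulation that averages each step's own mean, and a corrected accumulation that sums token losses across steps and divides once by the total token count.

```python
from typing import List

# Hypothetical per-token losses: a sequence is a list of token losses, and a
# mini-batch (one gradient accumulation step) is a list of sequences.
Sequence = List[float]
MiniBatch = List[Sequence]


def full_batch_loss(steps: List[MiniBatch]) -> float:
    """Mean token loss over the whole batch, as if it fit in memory at once."""
    tokens = [t for batch in steps for seq in batch for t in seq]
    return sum(tokens) / len(tokens)


def naive_accumulated_loss(steps: List[MiniBatch]) -> float:
    """Average each step over its own tokens, then average the step means.

    When steps hold different numbers of tokens, this over-weights tokens
    that land in short steps.
    """
    step_means = []
    for batch in steps:
        tokens = [t for seq in batch for t in seq]
        step_means.append(sum(tokens) / len(tokens))
    return sum(step_means) / len(step_means)


def fixed_accumulated_loss(steps: List[MiniBatch]) -> float:
    """Sum token losses per step, then divide once by the total token count."""
    total_tokens = sum(len(seq) for batch in steps for seq in batch)
    step_sums = [sum(t for seq in batch for t in seq) for batch in steps]
    return sum(step_sums) / total_tokens


if __name__ == "__main__":
    # Step 1 holds one 4-token sequence; step 2 holds one 1-token sequence.
    steps = [[[1.0, 1.0, 1.0, 1.0]], [[3.0]]]
    print(full_batch_loss(steps))         # 1.4
    print(naive_accumulated_loss(steps))  # 2.0
    print(fixed_accumulated_loss(steps))  # 1.4
```

In this example the naive average gives the single token in the short step four times the weight of each token in the long step. When every step holds the same number of tokens, the naive and corrected versions agree, which is why the problem is easy to miss. Because gradients are linear in the loss, matching the loss this way also matches the accumulated gradients; in a real trainer this requires knowing the total token count of the accumulated batch before dividing.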

Qwen 2.5 + Coder

Qwen 2.5 Coder (32B), released last week, matches GPT-4o's performance on coding. Unsloth makes Qwen 2.5 fine-tuning 2x faster and uses 60% less memory than Flash Attention 2 (FA2) + Hugging Face (HF).

Google Colab notebooks to fine-tune Qwen on a free Tesla T4:

Thank you!

Thank you for reading and for your support. If you have any questions, please reach out to us on Twitter (X) or via support@unsloth.ai.